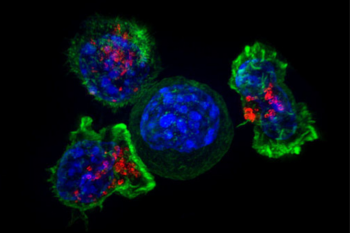

Image Credit

Alex Ritter, Jennifer Lippincott Schwartz and Gillian Griffiths, National Institutes of Health

March 28, 2023

Marios Gavrielatos had never participated in a machine learning competition when he decided to enter the Eric and Wendy Schmidt Center’s Cancer Immunotherapy Data Science Grand Challenge.

Gavrielatos’ friend and colleague, Konstantinos Kyriakidis, asked him to team up in the competition after learning about it from a promotional video on YouTube.

Despite Gavrielatos’ newcomer status, the pair developed a new deep learning model that won them the first part of the competition last month.

The challenge “helped me develop new computational skills, deep-learning wise,” said Gavrielatos, a bioinformatics master’s student at the National and Kapodistrian University of Athens, adding that because they couldn’t find similar problems online, “we had to develop something new ourselves, which was interesting.”

The Cancer Immunotherapy Data Science Grand Challenge, which ran on Topcoder from Jan. 9 to Feb. 3, aimed to uncover new ways to modify, or “perturb,” T cells to make them more effective at killing cancer cells to ultimately improve cancer treatment.

Top challenge submissions will be tested out in a lab at the Broad Institute of MIT and Harvard later this year.

The Eric and Wendy Schmidt Center at the Broad Institute of MIT and Harvard partnered with Harvard’s Laboratory for Innovation Science, the MIT Department of Electrical Engineering and Computer Science, Topcoder, Gordian Biotechnology, and Massachusetts General Hospital (MGH) to run the challenge. Over 900 people registered for the first part of the competition — making it Topcoder’s fifth-largest data science challenge to date.

“In biology, we can perform perturbations on a scale that other fields can only dream of, meaning we need to develop novel machine learning methods to best make use of such data and answer biological questions,” said Caroline Uhler, co-director of the Eric and Wendy Schmidt Center, a core member of the Broad Institute, Principal Investigator in the MIT Laboratory for Information and Decision Systems (LIDS), and professor in the Department of Electrical Engineering and Computer Science and the Institute for Data, Systems and Society (IDSS) at MIT. “We held this data science challenge to direct bright computational minds from around the world to this problem in cancer immunotherapy. And we’re thrilled that we now get to test out some of their proposed perturbations experimentally.”

A great fit for a data science challenge

While chemotherapy and radiation have saved many lives, these treatments have a weak spot: they are not specific enough, meaning they can kill healthy cells along with cancerous ones. The promise of cancer immunotherapy, a newer and effective form of cancer treatment, is that it can harness our immune system to recognize and kill cancer cells while, in most cases, leaving other cells alone.

Cancer cells have developed a number of ways to evade our immune system. One such strategy is sending signals to T cells to make them exhausted and ineffective at killing cancer cells. That’s why cancer researchers like Nir Hacohen, a director of the Broad Institute’s Cell Circuits Program and director of the Center for Cancer Immunology at Mass General Hospital, are investigating whether perturbing certain genes could shift T cells to a cancer-fighting, “effector” state.

“We were excited to develop this data science challenge with the Eric and Wendy Schmidt Center because the T cell exhaustion problem seemed like a great fit for this kind of competition,” said Hacohen. “It was an opportunity to combine our cancer biology and immunology knowledge with the computational and mathematical skills of machine learning experts from all over the world.”

Marc Schwartz, a postdoctoral fellow in the Hacohen Lab, ran experiments testing the effects of 73 gene knockouts in T cells in mice with cancer. (A gene knockout is a genetic perturbation that stops a gene from functioning.) Given that it took months to test just a fraction of the 20,000 potential gene knockouts, Broad researchers wanted a way to zero in on the most promising perturbations. Enter machine learning.

The overarching challenge was divided into three parts that ran as individual data science competitions on Topcoder. In Challenge 1, participants received gene expression data from 66 of the 73 T-cell-gene knockouts from Schwartz’s experiments as training data. They then had to develop an algorithm that could predict how knocking out the seven “held-out” genes would affect T cells.

Challenge 2 participants used their algorithms from the first challenge to propose new gene knockouts (picking from any of the 20,000 genes in the entire genome) to shift as many T cells as possible into a cancer-fighting state. In Challenge 3, participants proposed a metric for ranking how well a particular gene knockout would bring about this desired shift in T cells.

To solve Challenge 1, winners Gavrielatos and Kyriakidis first pared down the single-cell dataset so that it contained only expression information from important genes, that is, genes whose expression changed across different T cell states. Preprocessing the data is a crucial step for distilling the “signal,” or useful information, when working with such noisy data, said Kyriakidis, who has previously won several precisionFDA data science challenges.
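The article does not say how the winning team scored genes, so the following is only a minimal sketch of one plausible filter: rank genes by how much their average expression differs between T cell states and keep the top few. The function name `select_variable_genes`, the gene names, and the toy values are all hypothetical.

```python
from statistics import mean, pvariance


def select_variable_genes(expression, cell_states, top_k):
    """Keep the top_k genes whose mean expression varies most across states.

    expression:  dict mapping gene name -> list of per-cell expression values
    cell_states: list of state labels, one per cell, in the same cell order
    """
    states = sorted(set(cell_states))
    scores = {}
    for gene, values in expression.items():
        # Mean expression of this gene within each T cell state.
        state_means = [
            mean(v for v, s in zip(values, cell_states) if s == state)
            for state in states
        ]
        # Variance of the per-state means: large when the gene tracks state.
        scores[gene] = pvariance(state_means)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:top_k]


# Toy data (hypothetical): four cells, three genes.
cell_states = ["effector", "effector", "exhausted", "exhausted"]
expression = {
    "GeneA": [5.0, 6.0, 0.0, 1.0],  # differs strongly between states
    "GeneB": [2.0, 2.0, 2.0, 2.0],  # constant, carries no state signal
    "GeneC": [1.0, 3.0, 2.0, 3.0],  # differs only weakly between states
}
selected = select_variable_genes(expression, cell_states, top_k=2)
print(selected)
```

On real single-cell data, a filter like this would typically run on normalized counts and keep hundreds or thousands of genes rather than two.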

The pair next trained a deep learning model to predict what portion of T cells would move into an effector, exhausted, or alternate state after a specific gene was knocked out. Initially, they tried to come up with an algorithm using only the training data provided from Schwartz’s experiment. But as they continued working, they realized that incorporating public biomedical databases into their analysis — namely, Reactome, a database of biological pathways in human cells, and STRING, a protein interaction database — could reveal associations between the missing and observed genes.
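To make the prediction target concrete, here is a small sketch of what "the portion of T cells in each state" looks like as data, and of one simple way to compare a prediction against an observation. The three state labels follow the article's description; the L1 (sum of absolute differences) error is an assumption chosen for illustration, not necessarily the challenge's scoring rule, and the cell counts are made up.

```python
from collections import Counter

# State labels as described in the article; real datasets may use more states.
STATES = ("effector", "exhausted", "alternate")


def state_proportions(cell_labels, states=STATES):
    """Turn per-cell state labels into a vector of proportions summing to 1."""
    counts = Counter(cell_labels)
    total = len(cell_labels)
    return [counts[s] / total for s in states]


def l1_error(predicted, observed):
    """Sum of absolute differences between two proportion vectors."""
    return sum(abs(p - o) for p, o in zip(predicted, observed))


# Hypothetical cells observed after knocking out one gene.
labels = ["effector"] * 2 + ["exhausted"] * 6 + ["alternate"] * 2
observed = state_proportions(labels)

# Hypothetical model output for the same knockout.
predicted = [0.25, 0.5, 0.25]
error = l1_error(predicted, observed)
print(observed, error)
```

A model for this task, whatever its architecture, maps a knocked-out gene to one such proportion vector; a lower error means the predicted shift in T cell states is closer to what the experiment showed.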

“The whole process was so rewarding,” said Kyriakidis. “You have to divide the whole problem into smaller parts to try to find the solution to each part and connect the dots.”

Sometimes, simple algorithms are best

The second-place winners were a team of four from MIT: two graduate students from LIDS, Yuzhou Gu and Anzo Teh; IDSS postdoc Yanjun Han; and undergraduate student Brandon Wang. Teh, who is also an Eric and Wendy Schmidt Center PhD fellow, said his advisor, MIT professor Yury Polyanskiy, a Principal Investigator at LIDS, suggested that he and the other researchers join forces for the challenge.

Teh, Gu, and Han have a theoretical and computational background, specifically in information theory, while Wang has expertise in computational biology.

“I did feel like this challenge was a good way for me to learn how to work on these types of problems because I’m pretty new to the biology field,” said Teh. Several teams used neural networks to describe the experimental gene expression data, an approach that often requires thousands of parameters to create an effective model. The MIT team, on the other hand, made a simplifying assumption that gene expression could be modeled with a small number of parameters following a Gaussian distribution, or a bell curve.

They then reduced the dimensions of their data from 20,000 to 50 columns using a machine learning technique called “principal component analysis.” The MIT team also incorporated an outside public database on human genes into their model, mapping human gene expression profiles to their missing mouse counterparts. Finally, they used a proven machine learning classification algorithm to determine how the gene expression profiles lined up with T cell states.
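Principal component analysis replaces many correlated columns with a few directions that capture most of the variation. The sketch below is a dependency-free illustration using power iteration with deflation on a tiny, made-up matrix (3 columns reduced to 2, rather than 20,000 to 50). It is not the team's code; in practice one would use a library routine, and the classification step the team applied afterward is not shown because the article does not name it.

```python
import math
import random


def center(rows):
    """Subtract each column's mean so the data is centered at the origin."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def top_component(rows, iterations=200, seed=0):
    """Leading principal direction of centered rows, via power iteration."""
    rng = random.Random(seed)
    d = len(rows[0])
    v = [rng.gauss(0, 1) for _ in range(d)]
    for _ in range(iterations):
        # Apply X^T X to v without building the d x d covariance matrix.
        scores = [dot(r, v) for r in rows]
        v = [sum(s * r[j] for s, r in zip(scores, rows)) for j in range(d)]
        norm = math.sqrt(dot(v, v))
        v = [w / norm for w in v]
    return v


def pca(rows, n_components):
    """Return (components, projected rows) for the first n_components."""
    centered = center(rows)
    residual = centered
    components = []
    for k in range(n_components):
        v = top_component(residual, seed=k)
        components.append(v)
        # Deflate: remove what this component explains before finding the next.
        residual = [[x - dot(r, v) * w for x, w in zip(r, v)] for r in residual]
    projected = [[dot(r, v) for v in components] for r in centered]
    return components, projected


# Toy matrix (hypothetical): 4 cells x 3 genes; the first two genes move together.
data = [[1.0, 1.0, 0.0], [2.0, 2.0, 0.1], [3.0, 3.0, -0.1], [4.0, 4.0, 0.0]]
components, projected = pca(data, n_components=2)
print(components[0])
```

Because the first two toy genes rise and fall together, the first component weights them equally, which is the kind of redundancy PCA is meant to compress.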

“Sometimes simple algorithms can work better than neural networks,” said Teh. The MIT team’s background in information theory, which is the study of organizing and quantifying data, helped them discover what signals in the experimental data to focus their models on.

Peter Novotný, the third-place winner and a math professor at the University of Žilina in Slovakia, also took a relatively simple approach to solving Challenge 1. Novotný, a former Topcoder “copilot” who had participated in a NASA asteroid-hunter challenge, among many other competitions, has more of a mathematics than a computer science background. In part through participating in data science challenges, though, he has discovered that he enjoys machine learning.

“And, I also quite like competing,” he said.

For the cancer immunotherapy challenge, Novotný first represented his training data with 14 features from the T cell data that quantified how gene expression levels differed between perturbed and unperturbed cells. Then, he built a model using a common machine learning algorithm, the “random forest,” and predicted the distribution of T cell states for each of the seven withheld genes.
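The article does not list Novotný's 14 features, so the following only illustrates the general idea of a feature that measures how expression differs between perturbed and unperturbed cells. It uses a per-gene difference in mean expression; the function name `mean_shift_features` and the toy numbers are hypothetical, and the random forest itself is not implemented here.

```python
from statistics import mean


def mean_shift_features(perturbed_cells, unperturbed_cells):
    """Per-gene difference in mean expression: perturbed minus unperturbed.

    Each argument is a list of cells; each cell is a list of expression
    values with genes in the same order.
    """
    n_genes = len(unperturbed_cells[0])
    return [
        mean(cell[g] for cell in perturbed_cells)
        - mean(cell[g] for cell in unperturbed_cells)
        for g in range(n_genes)
    ]


# Toy data (hypothetical): two cells per condition, two genes.
unperturbed = [[1.0, 4.0], [3.0, 4.0]]
perturbed = [[0.0, 5.0], [1.0, 6.0]]
features = mean_shift_features(perturbed, unperturbed)
print(features)
```

A feature vector like this, computed once per knocked-out gene, is the sort of compact input a random forest or similar model could be trained on to predict T cell state proportions.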

To make the challenge accessible to participants without a biology background, Lightmark Creative and Orr Ashenberg, associate director of computational biology at The Klarman Cell Observatory of the Broad Institute, produced a 1.5-hour crash course on cancer biology, perturbation data, and single-cell sequencing technologies.

“To compete in this contest, you really need to understand what the data is, and without those lectures, it would be quite difficult to understand the problem,” said Novotný.

In addition, Uhler held an IAP course that ran at the same time as the challenge, encouraging MIT students to team up and participate in the competition.

Testing perturbations in the lab

The Eric and Wendy Schmidt Center also announced last month who won the third challenge, in which participants came up with a metric to rank new T cell perturbations. The winners of that challenge were:

- First place: Dariusz Brzeziński and Wojciech Kotlowski from Poznań University of Technology in Poland

- Second place: Salil Bhate, MIT, postdoctoral fellow at the Eric and Wendy Schmidt Center

- Third place: Irene Bonafonte Pardàs, Artur Szalata, and Benjamin Schubert from Helmholtz Center Munich and Miriam Lyzotte from Mila - Quebec AI Institute

Now, researchers at the Hacohen Lab will run experiments to test how the perturbations proposed in Challenge 2 affect mouse T cells’ cancer-fighting abilities.

“It will be really exciting to see how these computationally identified perturbations actually perform in the lab,” said Uhler. “After all, machine learning cannot replace experiments, but the goal is to work hand in hand with biologists and help prioritize the next experiments to run.”